AI-guided care spans from space to the Sonoran Desert

ASU assistant professors Pooyan Fazli (left) and Hasti Seifi work with a virtual reality headset on a video production stage. The ASU researchers have received a grant from NASA to develop a medical assistant powered by artificial intelligence that can provide urgently needed instructions via an augmented reality headset to users in remote areas. Photo by Erika Gronek/ASU

Picture yourself on a future mission to Mars. You’re floating in zero gravity, tools strapped to your suit, when a crewmate slices open their arm while repairing equipment. On Earth, you’d dial 911. In orbit, you’d radio Houston. But on Mars, help is millions of miles away. A signal can take more than 20 minutes to travel one way, and the reply needs just as long to come back. Add the time mission control needs to respond, and nearly an hour might pass before you hear back. That’s time you simply don’t have when an astronaut is injured.

That scenario might sound like science fiction, but it’s the kind of problem NASA is planning for right now. Astronauts are trained to handle basic medical issues, but they’re not surgeons, and a crisis could demand more than their training covers. What they need is the equivalent of a doctor at the ready, one who can guide them step by step through any emergency.

At Arizona State University, a team of computer scientists believes it has the answer. Backed by new funding from NASA, Hasti Seifi and Pooyan Fazli are developing a lightweight augmented reality headset that can serve as a just-in-time medical assistant.

Seifi, an assistant professor of computer science and engineering in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at ASU, says the new project builds on past successful work.

“We realized our research on question-answering systems could be incredibly useful in space medicine,” Seifi says. “If something happens mid-procedure, nonmedical experts need quick, accurate answers. Waiting 20 minutes is simply not practical.”

Smart glasses with answers on demand

The device itself is planned to look more like a stylish pair of sunglasses than the traditional virtual reality headsets most people picture.

When worn by an astronaut, the glasses will capture what the wearer sees, listen for spoken questions and instantly provide an answer drawn from a carefully vetted medical knowledge base.

That answer arrives as both sound and sight. A calm voice might talk the user through bandaging a wound or stabilizing a fracture. At the same time, visual overlays appear directly in the astronaut’s field of view, highlighting the right spot to apply pressure or playing a short video clip showing the next step.

Fazli, an assistant professor in The GAME School, points out that this project builds on years of work applying AI to accessibility. He has led teams that have designed question-answering systems and assistive robotics for blind and low-vision users, as well as older adults. Now, he and Seifi are taking that expertise to the most unforgiving setting imaginable: deep space.

Perhaps most importantly, the headset doesn’t require internet access. Every part of the system runs offline, a necessity for space exploration, but also a potential game changer for communities on Earth.

The researchers expect to have their first prototype ready in December. In the months that follow, they’ll test it on simulated injuries in the lab, including experiments with medical mannequins. If successful, astronauts may one day trial the system inside NASA’s training centers, refining it further before it ever leaves the planet.

From orbit to Arizona: rural applications

As thrilling as the space application is, the researchers are just as passionate about how the technology could change lives closer to home. Arizona’s rural communities face persistent shortages of doctors and nurses. In some areas, patients may wait hours for professional medical care. These delays can prove fatal in emergencies.

“We’re already working on adapting this AR system for rural areas in Arizona,” Fazli says. “Community health workers, family caregivers or even nonexperts could use it to handle simple procedures until help arrives. That’s not feasible right now when the nearest professional care might be hours away.”

Because the headset is designed to work without internet connectivity, it could provide step-by-step medical guidance in places where broadband is unreliable or nonexistent.

From remote ranches to tribal lands, the glasses could act as a lifeline, offering instructions in those critical moments before trained medical personnel arrive.

The team is preparing plans for Arizona stakeholders to expand testing in local communities, with the hope that what starts as a NASA experiment will soon benefit people in our own state.

A proven track record of innovation

This is not the first time Seifi and Fazli have pushed technology into new frontiers. Seifi, an expert in haptics, was awarded a 2024 Faculty Early Career Development (CAREER) Award from the U.S. National Science Foundation, or NSF, for her work on programmable touch technologies. Her lab explores how vibrations, force and friction can make digital tools more accessible, with applications ranging from medical devices to safer navigation systems in cars. She has long been focused on designing interfaces that feel natural and inclusive.

Fazli, meanwhile, specializes in human-robot interaction, vision and language, multimodal learning and video understanding. His research explores how AI can collaborate with people in meaningful ways to advance social good. He has received major funding from the National Institutes of Health, the NSF and Google, and has co-led outreach programs like AI+X, which encourages underserved Arizona students to explore careers in artificial intelligence.

Together, they bring a blend of technical expertise and a commitment to human-centered design. If their AR medical assistant can help an astronaut in orbit perform a procedure under pressure, it could also help a rancher in northern Arizona care for a neighbor while waiting for an ambulance or medical helicopter.

The months ahead promise to be busy. The ASU team will work with NASA program managers to determine which medical scenarios should be prioritized, from wound care to more complex procedures. By December, they expect to have a working prototype ready for demonstrations, with further development and testing to follow in 2026.

“The exciting thing is that it works in both directions,” Seifi says. “The challenges of space pushed us to innovate, but the benefits come right back to Earth.”

Tomorrow’s emergency room, today

Space medicine might seem like a niche concern, but the solutions it inspires have far-reaching implications. By putting an AI-powered medical assistant inside a pair of glasses, ASU researchers are creating a tool that can guide anyone, from astronauts to rural community workers, through lifesaving steps when every second counts.

If the project succeeds, the same device that keeps astronauts alive millions of miles from home may one day save lives in remote Arizona.

That’s the extraordinary promise of research that bridges the final frontier and the open frontier.

More Science and technology

New research by ASU paleoanthropologists: 2 ancient human ancestors were neighbors

In 2009, scientists found eight bones from the foot of an ancient human ancestor within layers of million-year-old sediment in the Afar Rift in Ethiopia. The team, led by Arizona State University…

When facts aren’t enough

In the age of viral headlines and endless scrolling, misinformation travels faster than the truth. Even careful readers can be swayed by stories that sound factual but twist logic in subtle ways that…

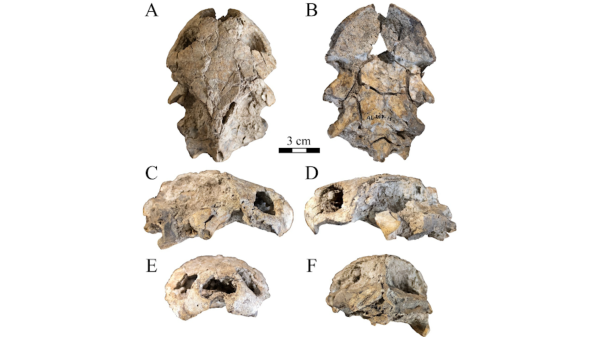

Scientists discover new turtle that lived alongside 'Lucy' species

Shell pieces and a rare skull of a 3-million-year-old freshwater turtle are providing scientists at Arizona State University with new insight into what the environment was like when Australopithecus…